With our free Joomla SEO extension, the Meta data plugin, you can add noindex, nofollow, and other robots meta tags to selected pages in bulk. This lets you easily remove ugly non-SEF URL parts and URL query parameters from Google's search results (SERP).

When the plugin is configured properly, the meta tags change depending on the URL.

Configuration

- Status

- A rule only takes effect when it is enabled.

- URL inspection

- Your page (URL) is inspected with the selected method, which can be URL query parameter(s), URL part(s), Regex, or All. For background, see our detailed explanation of URL query parts and parameters.

- URL inspection: URL query parameter(s)

- URL query parameters always follow the "?" symbol, and multiple parameters are separated by the "&" symbol. The plugin inspects only query parameter names, not their values. Every parameter in the query string can be inspected, including those that follow an "&".

If you assign several query parameters to one rule, separate them with the pipe character: |

Common URL query parameters for noindex, nofollow SEO signaling: tmpl|id|Itemid|phocaslideshow|do_pdf|buttons|detail|task|type|pop|option|catid|view|action_object_map|keyword|language
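To illustrate that only parameter names are compared (never their values), here is a minimal Python sketch. It is not the plugin's code (the plugin is a Joomla extension); `has_query_param` is a hypothetical helper, and exact, case-sensitive name matching is an assumption.

```python
from urllib.parse import urlsplit, parse_qsl


def has_query_param(url: str, rule: str) -> bool:
    """Return True if the URL's query string contains any parameter *name*
    from the pipe-separated rule (e.g. "tmpl|print"). Values are ignored.
    Assumption: names are matched exactly and case-sensitively."""
    wanted = {name for name in rule.split("|") if name}
    query = urlsplit(url).query  # the part after "?", without any "#fragment"
    # keep_blank_values=True so parameters like "?tmpl=" or "?print" still count
    names = {name for name, _ in parse_qsl(query, keep_blank_values=True)}
    return not wanted.isdisjoint(names)
```

For example, `has_query_param("https://example.com/index.php?option=com_content&tmpl=component", "tmpl|print")` is true, while `?page=tmpl` does not match the rule `tmpl`, because "tmpl" appears there only as a value.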

- URL inspection: URL part(s)

- URL parts are usually the segments between slashes, such as /68-uncategorized/ or /virtuemart/.

If you use several parts in one rule, separate them with the pipe character: |

For example: 68-uncategorized|virtuemart

Common URL parts to assign for noindex, nofollow SEO signaling: virtuemart|component|by,mf_name|detail|68-uncategorized
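The following Python sketch shows one way URL-part matching can work. It assumes, and this is not confirmed by the plugin, that each part is compared against whole path segments between slashes; `has_url_part` is a hypothetical helper. Under that assumption, a part must include the .html suffix when the URL has one.

```python
from urllib.parse import urlsplit


def has_url_part(url: str, rule: str) -> bool:
    """Return True if any path segment equals one of the pipe-separated parts.
    Assumption: parts are matched as whole segments between slashes.
    Empty entries (e.g. from a trailing "|") are ignored."""
    wanted = {part for part in rule.split("|") if part}
    segments = {seg for seg in urlsplit(url).path.split("/") if seg}
    return not wanted.isdisjoint(segments)
```

With this approach, `/computer-parts/keyboards/17-computer-parts.html` matches the part `17-computer-parts.html` but not `17-computer-parts`, and `/shop/virtuemart-guide` does not match `virtuemart`.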

In some cases you need to inspect the end of the URL. If Global Configuration ➙ Add Suffix to URL is set to Yes, you need to include the .html suffix in the part (see "Using .html suffix at URL parts" below).

- URL inspection: Regex

- You can match URLs to a rule with a regular expression (regex). This is useful when part of the URL varies or follows a pattern, such as pagination.

example.com/products/results,1321-1350

Suppose you have several paginated URLs like the one above that Google has already indexed, which is not beneficial. In this case you can write a regex that matches the varying parts, for example:

results,1321-1350
results,76-135
results,1081-1140

For this example, the regex rule is:

results,\d*-\d*

or:

/results,\d*-\d*/
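Here is a short Python sketch that tests the pagination regex against URLs. It assumes that the slashes in the second form are PCRE-style delimiters (as used by PHP's regex functions); Python's `re` module does not use delimiters, so the hypothetical `matches_regex` helper strips them.

```python
import re


def matches_regex(url: str, pattern: str) -> bool:
    """Return True if the pattern matches anywhere in the URL.
    Assumption: a pattern wrapped in slashes (/.../) uses PCRE-style
    delimiters, which are removed before compiling with Python's re."""
    if len(pattern) >= 2 and pattern.startswith("/") and pattern.endswith("/"):
        pattern = pattern[1:-1]
    return re.search(pattern, url) is not None
```

Note that `\d*` also accepts zero digits, so `results,-` would match as well; `results,\d+-\d+` requires at least one digit on each side of the hyphen.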

- URL inspection: ALL

- If URL inspection is set to "All", the plugin does not inspect individual URLs; the rule is applied to the whole site. You can still combine "All" rules with other rules, in which case the rules are executed in order. See "Controversial rules" below for more information.
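To show how an "All" rule interacts with other rules, here is a hypothetical Python model of rule evaluation. The `Rule` class and `collect_meta` function are illustrative names, not the plugin's API; the assumption that every enabled, matching rule contributes its content in configuration order is based on the combined meta tag described under "Controversial rules".

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Rule:
    enabled: bool                        # the rule's Status
    applies_to: Callable[[str], bool]    # the URL inspection method; "All" always returns True
    meta_name: str                       # e.g. "robots", "googlebot", "google"
    content: str                         # e.g. "noindex, nofollow"


def collect_meta(url: str, rules: list[Rule]) -> dict[str, list[str]]:
    """Apply enabled rules in their configured order and collect the
    content values per meta name (assumption: all matching rules add up)."""
    result: dict[str, list[str]] = {}
    for rule in rules:
        if rule.enabled and rule.applies_to(url):
            result.setdefault(rule.meta_name, []).append(rule.content)
    return result
```

With an "All" rule set to `index, follow` and a second rule setting `noindex, nofollow` for `/virtuemart/` URLs, a VirtueMart page collects both values; joined with `", "` they give `index, follow, noindex, nofollow`.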

- Meta name

- The list contains several meta names, but for Google search results these three are the important ones:

- robots

The robots meta tag lets you take a granular, page-specific approach to controlling how an individual page is indexed and served to users in Google Search results.

- googlebot

Googlebot is Google's standard web crawler. To prevent only Google from indexing your page, select this meta name in the rule.

- google

With this meta name you can, for example, tell Google not to show the sitelinks search box. For the full list of meta names Google supports, see the Google Search Central documentation.

The robots meta tag lets you instruct virtually all search engines on how to handle the page. If you want to give instructions specifically to Googlebot or Google on how to handle or index your pages, use the googlebot or google meta name.


- Meta content

- You can select one of the following meta content values from the list:

- noindex, nofollow

- noindex

- nofollow

- noindex, follow

- index, nofollow

- notranslate

- nopagereadaloud

- custom
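To see what a selected meta name and content produce in the page head, here is a minimal Python sketch that renders the tag. The `meta_tag` function is a hypothetical helper, not part of the plugin; escaping the values is a precaution mainly relevant to custom content.

```python
from html import escape


def meta_tag(name: str, content: str) -> str:
    """Render a meta tag from a meta name and a content value.
    Both values are HTML-escaped in case custom content contains quotes."""
    return f'<meta name="{escape(name)}" content="{escape(content)}">'
```

For example, `meta_tag("robots", "noindex, follow")` returns `<meta name="robots" content="noindex, follow">`, and `meta_tag("google", "notranslate")` returns `<meta name="google" content="notranslate">`.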

Other points to note

Controversial rules

If your configuration contains more than one rule, several rules may apply to the same page, depending on how they are defined. You may then end up with a meta tag like this:

<meta name="robots" content="index, follow, noindex, nofollow">

This can happen in several ways. For example, one rule sets "noindex, nofollow" for a URL part, while another rule sets "index, follow". Be careful when defining rules, and always check the results of your configuration. (In this particular case, Google applies the more restrictive "noindex, nofollow" directives.)
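A quick way to spot such conflicts is to check a content value for opposing directives. This Python sketch is a hypothetical helper (`find_conflicts`), not a plugin feature; it only checks the index/noindex and follow/nofollow pairs.

```python
def find_conflicts(content: str) -> list[tuple[str, str]]:
    """Return the directive pairs that contradict each other within one
    robots content value, e.g. "index, follow, noindex, nofollow"."""
    directives = {d.strip().lower() for d in content.split(",") if d.strip()}
    opposites = [("index", "noindex"), ("follow", "nofollow")]
    return [pair for pair in opposites if set(pair) <= directives]
```

For the combined tag shown above, both pairs are reported; since Google follows the more restrictive directive, any reported pair means the page is likely treated as noindex and/or nofollow.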

The plugin overrides the global configurations

This means that even if your site is set to "index, follow" globally, you can set the entire site to "noindex, nofollow" within the plugin: create a rule, select "All" for URL inspection, and set the "robots" meta name to "noindex, nofollow". Because this is easy to do by mistake, always check the results.

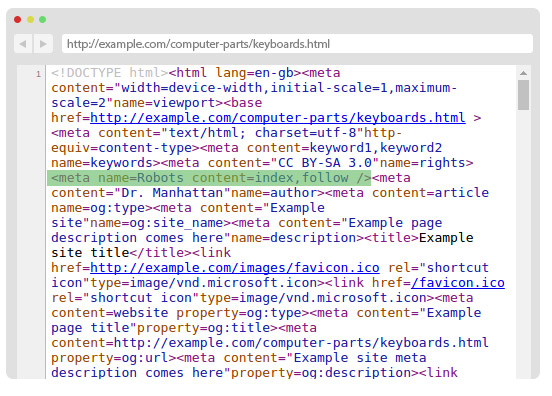
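Because a single "All" rule can switch the whole site to noindex, it helps to check the rendered pages. This Python sketch extracts robots-related meta tags from a page's HTML using the standard library parser. The class and function names are hypothetical, and limiting the check to the robots, googlebot, and google names is an assumption based on the meta names listed above.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collect (name, content) pairs of robots-related meta tags."""

    NAMES = {"robots", "googlebot", "google"}

    def __init__(self) -> None:
        super().__init__()
        self.found: list[tuple[str, str]] = []

    def handle_starttag(self, tag, attrs):
        # HTMLParser lowercases tag and attribute names; values may be None
        if tag != "meta":
            return
        attr = dict(attrs)
        name = (attr.get("name") or "").lower()
        if name in self.NAMES:
            self.found.append((name, attr.get("content") or ""))


def robots_meta(html_text: str) -> list[tuple[str, str]]:
    parser = RobotsMetaParser()
    parser.feed(html_text)
    parser.close()
    return parser.found
```

To check a live page, you could fetch its HTML, for example with `urllib.request.urlopen(url).read().decode("utf-8")`, and pass it to `robots_meta`.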

Using .html suffix at URL parts

If your URL ends with a .html suffix, you must include the suffix when you type the part into the URL part(s) field, like this:

This is the URL:

/computer-parts/keyboards/17-computer-parts.html

So you type it like this:

17-computer-parts.html

Use case

We fixed a sudden SEO traffic loss for one of our clients, partly with this extension. We describe the process in a use case blog post.